How Automated Annotation with Synthetic Data Elevates Model Training in Computer Vision

In contemporary computer vision development, the shortage of accurately labeled data remains one of the most persistent bottlenecks. Manual annotation is costly, slow, and prone to inconsistency. By some estimates it consumes over 90% of resources in many projects. Synthetic image generation combined with automated annotation addresses this by producing massive volumes of precisely labeled images. This accelerates training, reduces costs, and provides access to scenarios that are hard or impossible to capture in real-world data.

Synthetic Data Generation Methods for High-Fidelity Annotations

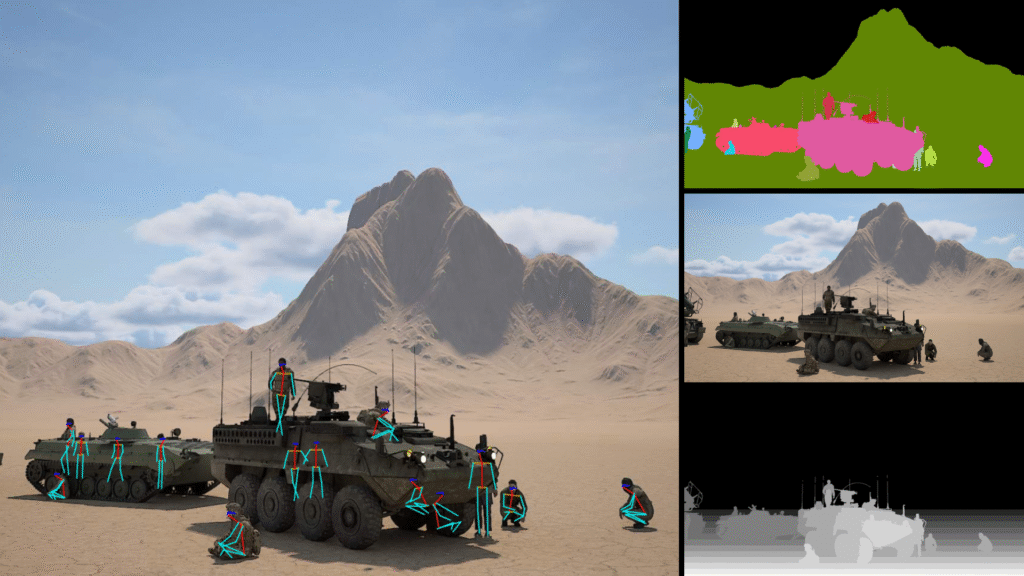

Synthetic data is generated using various techniques and simulation engines that create labeled training examples without relying on manual input. A leading approach in the domain is procedural generation. Tools such as AI Verse’s procedural engines, Helios and Gaia, create fully rendered environments with simulated lighting and sensors. They can produce vast datasets with pixel-perfect annotations such as 3D bounding boxes, depth maps, and class labels.

This method enables the rapid creation of diverse, richly annotated datasets tailored to specific computer vision tasks. It reduces reliance on expensive and error-prone manual labeling while ensuring scalability and precision.
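Because the renderer knows which object produced every pixel, labels can be derived directly from its outputs rather than drawn by hand. The following is a minimal sketch of how that works. It assumes the engine exports an instance-ID mask as a 2D grid of integers, with 0 marking background. The actual export format of Helios or Gaia is not described here, and the function name is hypothetical. Under those assumptions, a tight 2D bounding box for each object falls out of a single pass over the mask:

```python
from typing import Dict, List, Tuple

# Bounding box as (x_min, y_min, x_max, y_max), inclusive pixel coordinates.
BBox = Tuple[int, int, int, int]


def boxes_from_instance_mask(mask: List[List[int]], background: int = 0) -> Dict[int, BBox]:
    """Derive a tight 2D bounding box for every instance ID in a rendered mask.

    Assumption (hypothetical format): mask[y][x] holds the integer instance ID
    the renderer wrote for that pixel; `background` marks pixels with no object.
    """
    extents: Dict[int, List[int]] = {}
    for y, row in enumerate(mask):
        for x, inst in enumerate(row):
            if inst == background:
                continue
            if inst not in extents:
                # First pixel seen for this instance initializes its box.
                extents[inst] = [x, y, x, y]
            else:
                # Grow the box to include the current pixel.
                b = extents[inst]
                b[0] = min(b[0], x)
                b[1] = min(b[1], y)
                b[2] = max(b[2], x)
                b[3] = max(b[3], y)
    return {inst: (b[0], b[1], b[2], b[3]) for inst, b in extents.items()}


# Toy 4x5 mask with two objects (IDs 1 and 2).
example = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
]
print(boxes_from_instance_mask(example))  # {1: (1, 1, 2, 2), 2: (4, 2, 4, 3)}
```

Many training frameworks expect a different box convention, such as COCO's `[x, y, width, height]`. Converting between conventions is a small post-processing step on the same exact extents.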

Core Benefits of Automated Annotation for Synthetic Images

Synthetic data generation with automated annotation gives computer vision engineers several critical advantages:

- High Label Accuracy and Consistency: Because labels are computed directly from the rendered scene, automated annotation eliminates human labeling error and subjective bias. The result is precise pixel-level labels, which are indispensable for training high-quality models.

- Complex Annotation Generation: Annotations traditionally expensive or difficult to obtain, such as 3D poses, depth maps, and multi-sensor fusion data (infrared, LiDAR), can be generated efficiently.

- Data Diversity and Scalability: Synthetic datasets can simulate rare, hazardous, or edge-case scenarios at scale. They cover conditions that real-world data collection cannot easily reach, improving model generalization and robustness.

- Accelerated Iteration Cycles: Rapid synthetic dataset regeneration and annotation support agile experimentation, enabling faster model refinement and deployment.

- Bias Mitigation and Data Balancing: Synthetic data can be engineered to better represent underrepresented classes or demographics, addressing the imbalances common in real datasets.
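The data-balancing benefit can be made concrete with simple arithmetic. The sketch below rests on three assumptions. First, per-class example counts for the real dataset are known. Second, the goal is to top each class up to the size of the largest one; this is a hypothetical policy, and other targets are equally valid. Third, the function name and the counts in the usage line are illustrative, not real data. Under those assumptions, a per-class generation quota could be computed like this:

```python
from typing import Dict, Optional


def synthetic_quota(real_counts: Dict[str, int],
                    target_per_class: Optional[int] = None) -> Dict[str, int]:
    """Number of synthetic examples to generate per class.

    Each class is topped up to `target_per_class` examples in total. By default
    (a hypothetical policy) the target is the size of the largest real class.
    Classes already at or above the target get a quota of zero.
    """
    if not real_counts:
        return {}
    if target_per_class is None:
        target_per_class = max(real_counts.values())
    if target_per_class < 0:
        raise ValueError("target_per_class must be non-negative")
    return {cls: max(0, target_per_class - n) for cls, n in real_counts.items()}


# Illustrative counts, not real data.
counts = {"car": 5000, "truck": 1200, "drone": 150}
print(synthetic_quota(counts))  # {'car': 0, 'truck': 3800, 'drone': 4850}
```

Counting examples rather than images is a simplification. With multi-object scenes, a generator would need to control how many instances of each class appear per image.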

Real-World Applications

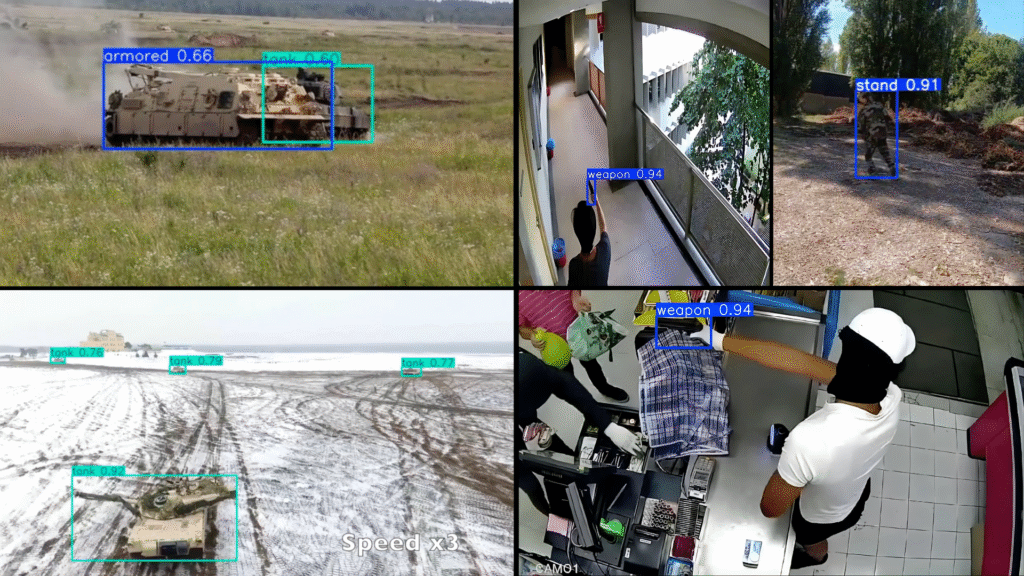

Automated annotation with synthetic data is increasingly critical across multiple computer vision domains:

- Autonomous Systems: Computer vision models for autonomous drones, for example, rely on synthetic multi-modal datasets that combine multiple sensor inputs. These datasets train robust navigation and object detection across diverse flight conditions.

- Counter-Unmanned Aerial Systems (Counter-UAS): Generating diverse aerial threat scenarios synthetically aids in detection, classification, and threat mitigation strategies.

- Surveillance and Security: Comprehensive surveillance datasets enable training of detection and behavioral analysis models under challenging lighting, weather, and occlusion conditions.

- Robotics: Synthetic environments provide annotated data for robotic navigation, manipulation, and interaction tasks, accelerating development for warehouse automation, inspection, and service robots.

Emerging sectors such as retail analytics and augmented reality also benefit from synthetic annotations, illustrating broad cross-industry relevance.

Industry Trends and Future Advances

The widespread adoption of synthetic data aligns with key 2025 industry trends emphasizing scalable, privacy-conscious AI development:

- Hybrid Training Pipelines: Combining synthetic and real data is now widely regarded as best practice for maximizing model accuracy and robustness. Empirical studies report improved precision and recall from this approach.

- MLOps Integration: Synthetic data generation and automated annotation are increasingly integrated into continuous model development pipelines, facilitating rapid dataset updates and iterative tuning.

- Domain Adaptation Research: Techniques that align the characteristics of synthetic and real data narrow the distribution gap between them, improving how well models transfer to real-world conditions.

- Bias and Fairness Initiatives: Synthetic datasets contribute to more balanced and representative AI models, addressing ethical and regulatory requirements.
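In practice, a hybrid training pipeline comes down to how real and synthetic samples are mixed during training. The sketch below assumes a fixed per-batch ratio, which is one common strategy among several; others include curriculum schedules and pretrain-then-fine-tune. The function name and file names are hypothetical. The 25% real share in the usage lines is an illustrative value, not a recommendation from this article. Under those assumptions, a batch sampler might look like:

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mixed_batch(real: Sequence[T], synthetic: Sequence[T], batch_size: int,
                real_fraction: float, rng: random.Random) -> List[Tuple[str, T]]:
    """Draw one training batch containing a fixed share of real samples.

    Each element is tagged with its source ("real" or "synthetic") so that
    per-source metrics can be tracked downstream. Sampling is with replacement,
    so both pools must be non-empty whenever their share of the batch is > 0.
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be in [0, 1]")
    n_real = round(batch_size * real_fraction)
    batch = [("real", rng.choice(real)) for _ in range(n_real)]
    batch += [("synthetic", rng.choice(synthetic)) for _ in range(batch_size - n_real)]
    rng.shuffle(batch)  # avoid a fixed real/synthetic ordering within the batch
    return batch


rng = random.Random(0)  # seeded for reproducibility
real_imgs = ["real_000.png", "real_001.png"]               # hypothetical files
synth_imgs = ["syn_000.png", "syn_001.png", "syn_002.png"]  # hypothetical files
batch = mixed_batch(real_imgs, synth_imgs, batch_size=32, real_fraction=0.25, rng=rng)
print(sum(src == "real" for src, _ in batch))  # 8
```

Tagging each sample with its source makes it straightforward to monitor whether the model performs differently on real and synthetic inputs. That per-source comparison is the kind of gap domain adaptation work aims to close.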

AI Verse: Elevating Synthetic Image Generation for AI Training

At AI Verse, we harness procedural generation technology to provide high-quality synthetic images tailored specifically for AI training needs. Our proprietary engine enables users to generate fully customizable, pixel-perfect labeled datasets on demand in as little as four seconds per image per GPU. Users control environment settings, lighting, objects, sensors, and more, ensuring datasets precisely match project requirements.
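The generation rate above translates directly into a best-case time budget for a dataset. This minimal sketch takes the stated figure of four seconds per image per GPU at face value. It assumes perfect parallelism across GPUs and ignores export, storage, and scheduling overhead. The function name is hypothetical.

```python
import math


def generation_hours(num_images: int, num_gpus: int,
                     seconds_per_image: float = 4.0) -> float:
    """Best-case wall-clock hours to render `num_images`.

    Assumes the per-GPU rate holds, images are split evenly across GPUs,
    and there is no other overhead.
    """
    if num_images < 0 or num_gpus <= 0 or seconds_per_image <= 0:
        raise ValueError("need num_images >= 0, num_gpus > 0, seconds_per_image > 0")
    images_per_gpu = math.ceil(num_images / num_gpus)  # slowest GPU sets the pace
    return images_per_gpu * seconds_per_image / 3600.0


print(round(generation_hours(100_000, 8), 2))  # 13.89
```

For example, 100,000 images on 8 GPUs works out to 12,500 × 4 s = 50,000 s, or roughly 13.9 hours. A single GPU at this rate produces about 900 images per hour.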

AI Verse’s synthetic images include detailed label types such as classes, instances, depth, normals, and 2D/3D bounding boxes, drastically reducing inaccuracies and human error present in manual annotation. Importantly, our synthetic datasets avoid privacy concerns inherent to real-world data, enabling safer AI training.

Summary

Automated annotation empowered by cutting-edge synthetic data generation techniques enables precise, scalable, and diverse dataset creation that accelerates development, reduces costs, and overcomes the limitations of real data. Its critical role spans autonomous systems, robotics, surveillance, and beyond, positioning synthetic data as an indispensable asset for sophisticated AI applications today and into the future.

AI Verse’s innovative synthetic image solutions stand at the forefront of this advancement, providing powerful, customizable tools designed to meet the highest standards of AI training data quality and efficiency.